Every time I upgrade any of my electronic devices, there is a real risk that something in the user interface will change. This is true not only when updating software but also when updating hardware. While whoever is responsible for the update decided that the new interface is an improvement over the old one, there is often a jolt as established users either adjust to the change or fall back on mechanisms the system provides to support the older interface. The following are some recent examples I have encountered.

A certain browser for desktop computers has been undergoing regular automagical updates; among the recent updates is a shuffling of the menu bar/button and of how the tabs are displayed within the browser. Depending on whom I talk to, people either love or hate the change. Typically, it is not that the new or old interface is better but that users must update their mental map of where and how to perform the tasks they need to do. For example, a new menu tree structure breaks many of the learned paths (the spatial mapping, so to speak) used to access and issue specific commands. As a result, executing a common command (such as clearing temporary files) can feel like searching for a needle in a haystack until the user rebuilds their spatial mapping for that command.

Many programs provide update and help pages to ease this kind of transition frustration, but sometimes the program is updated more often than a user invokes a specific command, which can cause further confusion because the information the user needs is buried in an older help file. One strategy to accommodate users is to let them explicitly choose which menu structure or display layout they want. Unfortunately, this is usually an all-or-nothing approach: new features may only be available under the new interface structure. Another, more subtle strategy that some programs use is to quietly support the old keystrokes while displaying the newer interface structure. This approach can work well for users who memorized keyboard sequences, but it does not help users who navigated the menus manually with the mouse. Additionally, these approaches do not really help with transitioning to the new interface; rather, they let a user put off the day of reckoning a little longer.

My recent experience with a new keyboard and mouse provides some examples of how these devices incorporate spatial cues that make it easier to adapt to them.

The new keyboard expands the number of keys available. Although it uses a standard QWERTY layout, the keys on the left and right edges of the layout sit at a different distance from the edges of the keyboard. At first, this caused me to hit the wrong key when I was trying to press keys around the corners and edges of the layout, such as the Ctrl and ~ keys. With a little practice, I no longer hit the wrong keys. It helps that the keys on the left and right edges of the layout have different shapes and sizes from the rest of the alphanumeric keys. The difference in shape provides immediate feedback about where my hands are within the spatial context of the keyboard.

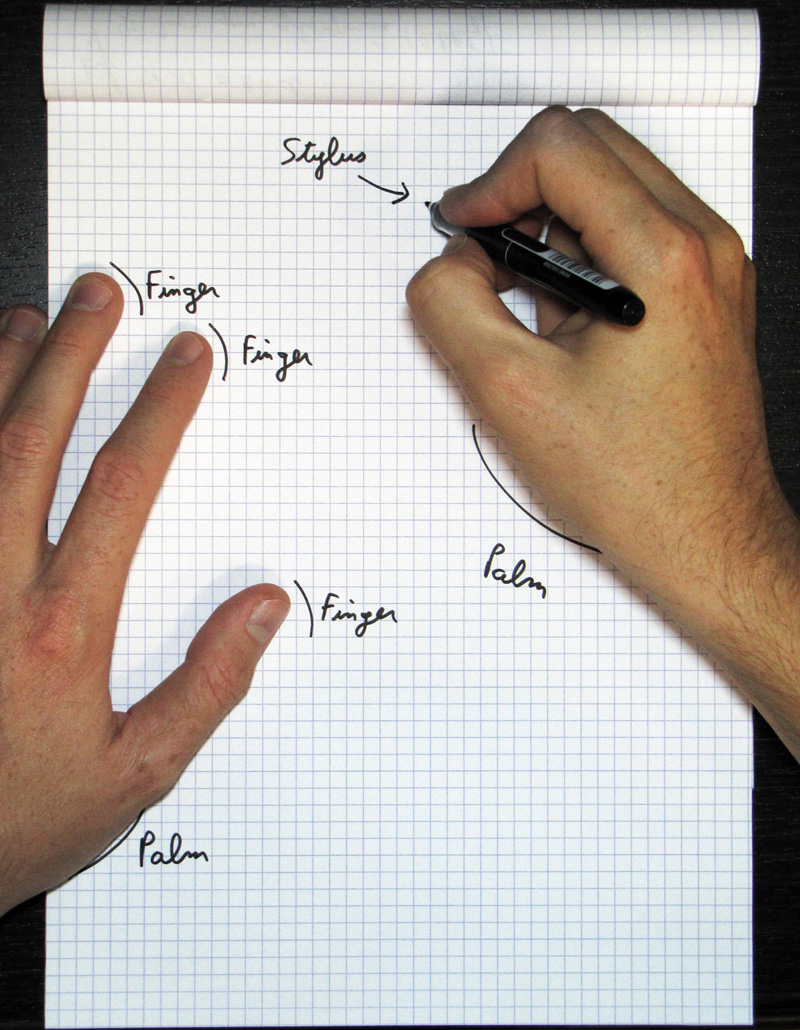

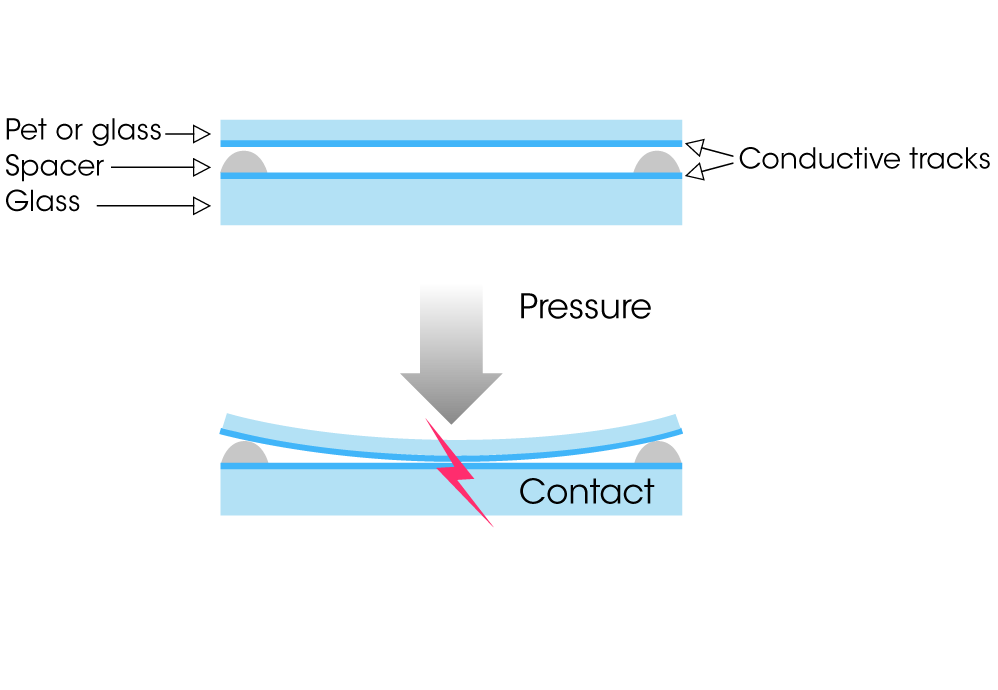

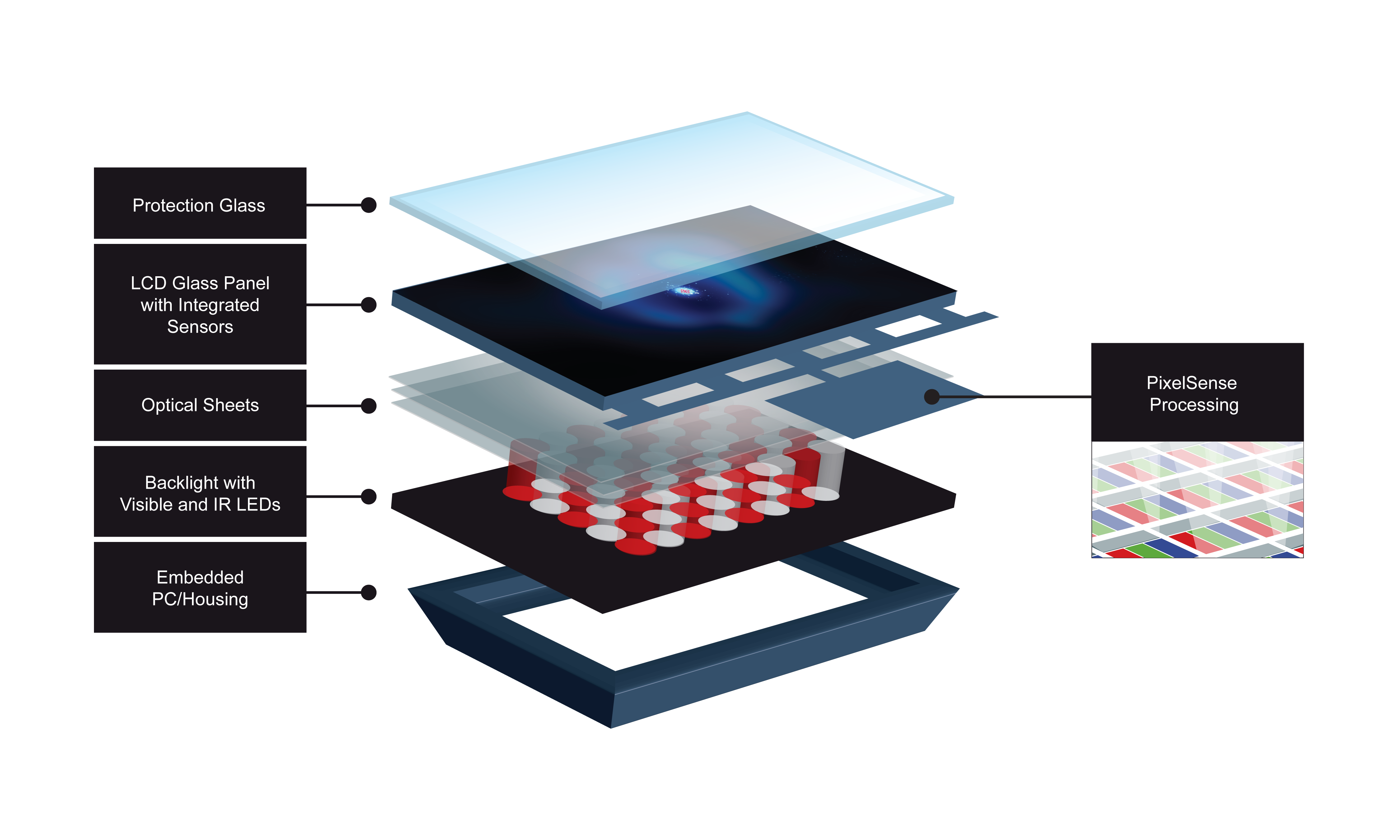

Additionally, the different sets of keys are grouped so that users can feel a break between the groupings with their fingers and can identify which grouping their hands are over without looking at the keyboard. This may seem obvious, but it is something contemporary touch interfaces cannot currently accommodate. The keyboard also includes key backlighting that lets the user choose the keyboard's color. My first impression was that this was a silly luxury, but it has proven useful: by assigning a different color to each context mode, I can immediately and unambiguously tell which mode the keyboard is in, which matters because the programmable keys take on different functions in each mode.

The new mouse expands on the usual one- or two-button design by supporting more than a dozen buttons. Having worked with mice with many buttons before, I required that the new mouse have at least two thumb buttons. However, the new mouse does a superior job not just with button placement but also in providing spatial cues that I have never seen on a mouse before. Each button on the mouse has a slightly different shape, size, and/or angle at which it meets the user's fingers. It is possible to immediately and unambiguously know which button you are touching without looking at the mouse. There is a learning curve to learn how each button feels, but the end result is that all of the buttons are usable with a very low chance of pressing unintended ones.

In many of the aerospace interfaces that I worked on, we placed different kinds of cages around the buttons and switches so that users could not accidentally flip or press them. By grouping the buttons and switches and using different kinds of cages, we were able to help the user's hands learn how performing a given function should feel. This provided a mechanism to help users detect when they might be making an accidental and potentially catastrophic input. This level of robustness is generally not necessary for consumer and industrial applications, but providing some level of spatial cues, either visual or physical, can greatly shorten the user's learning curve when the interface changes and alert users when they are about to press the wrong button.